With so much hype around large language models (LLMs), many look for ways to apply them to every aspect of the translation process. And LLMs do exhibit impressive capabilities across a wide spectrum of tasks. However, using an LLM for less complex tasks may not be necessary.

There are many areas of the translation process where the use of LLMs should be considered, but in this blog we’ll talk specifically about using LLMs for natural language processing (NLP) tasks.

Natural language processing in translation

Long before LLMs came into the picture, NLP significantly advanced the field of translation. With the aid of sophisticated algorithms and neural networks, NLP facilitates accurate and contextually nuanced translations. It also enables technology that makes translation faster, more affordable, and more accurate.

Machine translation (MT) is the best-known use of natural language processing. It plays a crucial role in overcoming language obstacles and ensuring smooth communication across different countries and societies. However, NLP is also employed in various other tasks such as text summarization, tokenization, and part-of-speech tagging.

NLP tasks have historically been performed by NLP frameworks. However, LLMs like the GPT family, PaLM, Claude, and Jurassic are showing remarkable promise in some of these areas. Deciding when to use an LLM and when to rely on an existing NLP framework that is not LLM-based requires careful assessment.

LLMs vs. NLP frameworks: Is one better than the other?

LLMs shine in tasks demanding a nuanced understanding of context, proficiency in generating human-like text, and adept handling of complex language structures. Their ability to comprehend nuances enables the generation of coherent and contextually relevant responses, making them indispensable in applications like conversational AI.

Conversely, specialized NLP frameworks, such as Stanford Stanza and spaCy, are tailor-made for efficiency in specific tasks that may not require the deep learning capabilities of LLMs. Tasks with well-defined rules and detailed linguistic annotations (such as tokenization, part-of-speech tagging, named entity recognition, and dependency parsing) are areas where these frameworks continue to excel.

Due to their optimized and controlled architectures, these NLP frameworks match or even outperform LLMs in tasks requiring detailed linguistic analysis and structured information extraction. In scenarios that require streamlined and computationally efficient solutions, opting for an NLP framework might prove less costly and more practical than deploying resource-intensive LLMs.
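To make the point about rule-governed tasks concrete, here is a minimal tokenization sketch that uses only Python's standard library. The pattern and the `tokenize` helper are illustrative assumptions, not the rules used by spaCy, Stanza, or Smartling; production frameworks handle far more edge cases. The aim is simply to show that a task like this can run in microseconds without any model at all.

```python
import re
from typing import List

# Hypothetical, simplified rules: a number (optionally with decimal or
# thousands separators), a word (optionally with internal apostrophes or
# hyphens), or a single punctuation character.
TOKEN_PATTERN = re.compile(r"\d+(?:[.,]\d+)*|\w+(?:['’-]\w+)*|[^\w\s]")


def tokenize(text: str) -> List[str]:
    """Split text into word, number, and punctuation tokens using fixed rules."""
    return TOKEN_PATTERN.findall(text)


if __name__ == "__main__":
    print(tokenize("Smartling's AI team tested part-of-speech tagging in 2024."))
```

Because the rules are fixed, the output is deterministic and easy to audit, which is part of why frameworks built on this kind of design remain attractive for structured linguistic analysis.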

An ROI-based assessment of NLP frameworks vs. LLMs

One way to decide whether to use LLMs or NLP frameworks is to evaluate return on investment. Since LLMs are currently more costly to maintain from a resource perspective, cost is an important element to consider in addition to performance. As LLMs improve and become more specialized, they may also become more economical in situations where NLP frameworks are currently deployed.
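As a rough illustration of what an ROI comparison could look like, the sketch below computes (benefit - cost) / cost for two options. Everything in it is an assumption made for the example: the `Option` class, the `roi` function, and especially the accuracy and cost figures, which are invented placeholders and not Smartling measurements.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """One candidate tool for an NLP task. All fields are illustrative."""
    name: str
    accuracy: float            # share of items handled correctly, 0.0 to 1.0
    cost_per_1k_items: float   # compute and maintenance cost per 1,000 items


def roi(option: Option, value_per_correct_item: float) -> float:
    """Return (benefit - cost) / cost for processing 1,000 items."""
    benefit = 1000 * option.accuracy * value_per_correct_item
    return (benefit - option.cost_per_1k_items) / option.cost_per_1k_items


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to show the calculation.
    candidates = [
        Option("NLP framework", accuracy=0.95, cost_per_1k_items=0.50),
        Option("LLM", accuracy=0.96, cost_per_1k_items=20.00),
    ]
    for option in candidates:
        print(f"{option.name}: ROI = {roi(option, value_per_correct_item=0.05):.1f}")
```

With real figures for your own workload, the same calculation shows whether a small gain in accuracy justifies a much larger running cost.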

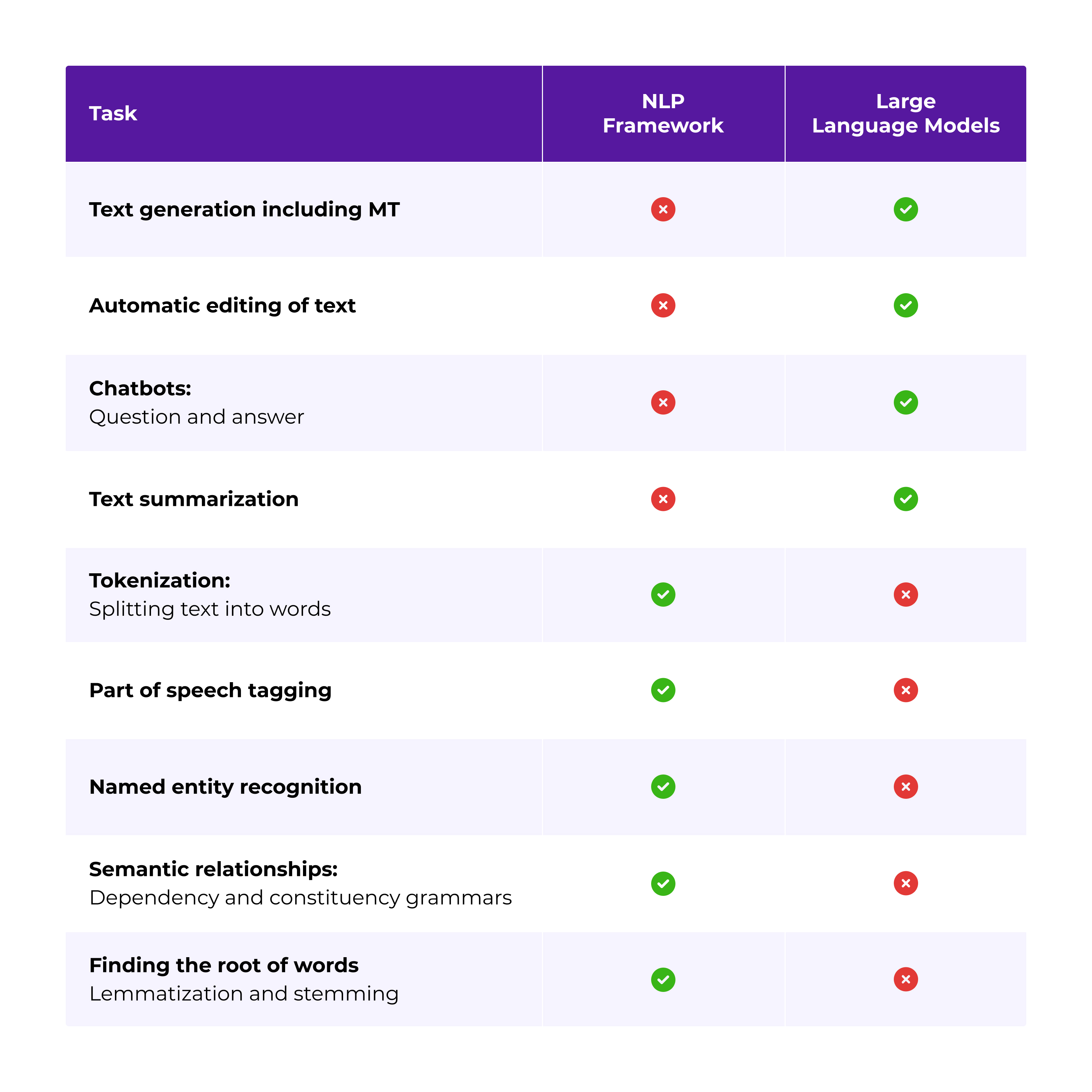

The table below provides guidance on which NLP tasks are more suited for NLP frameworks and which are more suited for LLMs. This is based on internal evaluation by Smartling’s AI team.

Maximizing your outcomes with LLMs

Achieving a balanced and effective approach to language processing means leveraging the synergy between LLMs and specialized NLP frameworks. While LLMs bring sophistication to tasks demanding context-aware responses, specialized NLP frameworks remain pivotal in tasks requiring precision, speed, and a deep understanding of linguistic structures at a lower computational cost. The future of NLP lies in strategically integrating these tools to address the multifaceted challenges presented by the ever-expanding realm of language understanding.

Want to learn more about how Smartling is deploying LLMs in our translation solutions? Get in touch.