Especially if you’ve had a human-driven translation process for some time, you may wonder if machine translation (MT) can produce comparable results in terms of quality. Let’s talk about the overall accuracy of MT, how machine translation quality is assessed, and where MT and MT quality estimation are headed.

What is the accuracy of machine translation?

Machine translation is fairly accurate thanks to the rise of neural networks—a method in artificial intelligence. Instead of translating nearly word-for-word, these networks consider context to produce more accurate translations. But do they come close to the human equivalent? The answer often depends on several factors:

- Your machine translation software. Some MT engines are more reliable than others in terms of translation quality, so the one you choose matters.

- Domain. Some machine translation systems are for general use, with others trained in specific industries. When translating complex terminology, such as for scientific or legal content, having an MT engine trained on your domain can make all the difference.

- Content type. Machine translation may not be as accurate for things like marketing campaigns, taglines, or slogans. These often require capturing a brand’s personality or an emotion rather than rendering an exact translation.

- Language pair. Even the best MT providers’ quality scores will vary depending on the language pair. A variety of factors can cause this, including a lack of equivalent words or phrases in the target and source languages.

All things considered, machine translation can often get you most of the way there on a translation. Human translators can then do machine translation post-editing (MTPE) to ensure accuracy and get content to a publishable state.

What is machine translation quality evaluation?

MT quality evaluation is the traditional way to assess if machine-translated text is on par with how a human would translate source text. There are a variety of evaluation metrics, including BLEU, NIST, and TER. These are used to score machine-translated segments based on their similarity to reference translations.

Reference translations are high-quality translations of the source text generated by human translators. These references are helpful, of course. However, they’re not always available—reliance on them during translation projects isn’t ideal. What’s the most effective way to evaluate quality, then? At Smartling, we use a combination of two methods.

The first is monthly, third-party multidimensional quality metrics (MQM) assessments across eight locals. These assessments are the gold standard in the industry for evaluating HT, MT, and MTPE. To assign appropriate quality scores, MQM looks at the type and severity of errors found in translated text.

Second, we leverage ongoing, real-time, automated quality assessments. These measure the end distance or translation error rate across HT, MT, and MTPE. Ultimately, these two types of evaluation enable us to offer guaranteed translation quality.

What is the importance of machine translation evaluation?

Evaluation aims to determine if a translation meets the following criteria:

- Accurate. The content should faithfully convey the message and sentiment of the original text in the target language.

- Clear. The message must be easily understandable and any instructions should be actionable and easy to follow.

- Appropriate. Certain audiences require certain levels of formality, for instance. Ensuring that translated segments show the audience due respect and don’t alienate or offend them is crucial.

A translated segment that falls short in any of these areas will require post-editing by a human translator.

As for the benefits of MT evaluation, there are several. You can use it to estimate translation costs and savings and to determine appropriate compensation for linguists. Translators can also see at a glance how much post-editing effort a piece of content will require.

Two methods of assessing machine translation quality

There are two options for evaluating machine translation:

- Manual evaluation: Human translators look at factors like fluency, adequacy, and translation errors, such as missing words and incorrect word order. The downside of this method is that each linguist may define “quality” subjectively.

- Automatic evaluation: This method involves scoring via algorithms. The algorithms use human reference translations and automatic metrics like BLEU and METEOR to judge quality. While human evaluation is more accurate on a sentence level, this method gives a bird's-eye view and is more scalable and cost-effective.

The differences: machine translation quality estimation vs. evaluation

Unlike quality evaluation, machine translation quality estimation (MTQE) doesn’t rely on human reference translations. It uses machine learning (ML) methods to learn from correlations between source and target segments. These correlations inform the estimates, which can be created at the word, phrase, sentence, or document level.

What to use MT quality estimation for

In our Reality Series episode on Machine Translation Quality Estimation, Mei Zheng, Senior Data Scientist at Smartling, gave this advice:

“If you have the resources to do automatic scoring on all of your contents, definitely do that. Then, sample some of those strings for evaluation by humans. This way, you get a baseline of what that automatic score corresponds to when a linguist sees it.”

What’s the value of setting these baselines informed by quality estimates for a wide range of content? When you also identify patterns across improperly translated strings, you can quickly and reliably judge whether machine-translated content is publishable as-is.

Factors that affect MT quality estimation scores

Automatic quality estimation is fast and cost-effective. However, as Alex Yanishevsky, Smartling’s Director of MT and AI Solutions says, “It's not going to give you the same insight as a human being would.” As discussed in the MTQE webinar, there are several reasons for this.

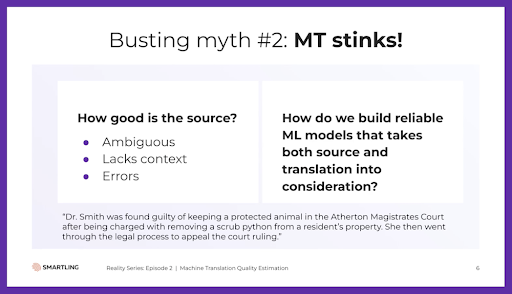

The source and its quality

There are different algorithms for quality estimation, but most don’t take the surrounding context into account, such as gender. Consider, for example, the following text: "Dr. Smith was found guilty of keeping a protected animal in the Atherton Magistrates Court after being charged with removing a scrub python from a resident's property. She then went through the legal process to appeal the court ruling.”

For accuracy, in a language like Spanish, “Doctor” would need to be translated into the feminine form (i.e., “Doctora”). However, most MT engines aren’t trained to detect this type of gender bias. Without prompt engineering applied to the source language, the output could be incorrect and impact the quality score.

Image Description: Source Considerations For MT Quality Estimation

Another factor that can affect quality estimations is a lack of clarity or potential for several interpretations of the source text. Mei put it simply: “When the source is ambiguous, and we as humans don't know how to interpret it, we cannot expect machine translation to do a better job than us.”

Additionally, because MTQE models are trained on clean data sets, they don’t always handle messier data well. Profanity is a good example. Mei explained, “When you use profanity words, [quality estimation] models give a very high penalty. They tell you, ‘Hey, this is a bad translation; you shouldn't publish this.’ When you do have use cases for [profanity], you can't use these automatic scoring mechanisms for that.”

Your domain or industry

Different scoring algorithms may give different estimates based on their familiarity with an industry’s terminology. So, Alex emphasized that “there's no one scoring algorithm that's all-encompassing.” He continued, “For an algorithm to be effective, we would need specific data for that domain or that industry.” Just as MT systems can be customized to a particular industry to yield more accurate translations, scoring algorithms can be trained on specific domains as well.

This domain-specific data can often be critical. Alex explained, “If you have a regulated industry like life science, medical, or pharmaceutical, 90% [accuracy], in most cases, probably is not good enough. If, for example, the comma is in the wrong place, and we're talking about using a surgical knife, it literally could be the difference between life or death.” The stakes are also high in other industries, like finance and legal.

The intended audience

Estimations may also vary based on an algorithm’s understanding of quality thresholds for a certain language. Mei said, “Formality—the word choice and voice of your content—falls under your stylistic preferences. But sometimes it's more than preference. It's like, ‘I have to convey this formally; otherwise, I will lose my client.’” Hence the reason manual evaluation can be so beneficial for quality assurance.

Mei continued, “In the case of Spanish where it's not just formal or informal, the word choice really depends on the level of respect you have to pay to the person you're speaking with. And that depends on the relationship you have with the person—if that person is of a more senior rank than you, or is more junior than you.”

The future of machine translation quality and MTQE

Machine translation quality will continue to improve, especially as more people use large language models (LLMs) like GPT-4 to supplement it. Mei made the observation that “these LLMs are very powerful at making corrections to MTs, such as [ensuring] the accuracy of gender, formality, style guides, etc.” However, they do have shortcomings that require linguists to pick up the slack. LLM hallucinations—where models present inaccurate information as fact—are a good example of this.

Ultimately, MT and LLMs will enable translation projects to be completed faster and more accurately. But linguists will remain in the driver’s seat, making adjustments as needed to improve translations. Alex shared a similar sentiment, predicting that translators may eventually take on more of the tasks of a prompt engineer. “They'll begin to learn how to actually write prompts in such a way that the LLM will be able to correct the output and smooth it out to a particular style they need—be it gender, be it formality.”

And what about the future of machine translation quality estimation? A big leap forward will be the creation of algorithms that consider the source and the target. Ideally, they’ll be able to properly weight scores to account for factors like ambiguity and subject matter complexity. Or, at least, improve the process of flagging issues that could negatively impact the target.

In the meantime, though, you already have access to state-of-the-art machine translation engines via Smartling’s Neural Machine Translation Hub. There are even built-in quality assessment features, such as Smartling Auto-Select. (Auto-Select considers the latest edits made to each available machine translation engine and identifies the current best provider for a specific locale pair.)

Image Description: MT Engines Integrated in Smartling NMT Hub

What are the results of this quality estimation-based multi-MT engine approach? Up to 350% higher quality machine translations and a reduced need for post-editing, meaning lower costs and faster time to market.

For more on how Smartling can help you achieve those outcomes, watch our Neural Machine Translation Hub demo. We’ll be happy to answer any questions you have afterward!